How we’ve scaled enterprise AI at BVNK

Our four-pillar framework for scaling AI without sacrificing speed or security.

“AI is the new electricity,” Andrew Ng famously said in 2017. This feels like the reality nowadays. But for financial institutions, this electricity comes with a hazard warning.

In a highly regulated environment, how do you empower every employee with AI without leaking sensitive financial data? Is it possible to move at the speed of a startup while maintaining the security standards of a bank?

Many companies are paralyzed by this paradox and end up effectively banning innovation because of (understandable) compliance fears.

Financial institutions in particular face a unique challenge: regulators, data sovereignty requirements, and audit trails demand governance that most AI tooling wasn't designed for.

At BVNK, we've proven that with the right guardrails, you can have both.

The turning point in AI adoption

Machine learning used to be the exclusive domain of tech giants, built by scarce data scientists over long development cycles. The evolution of GPT models, culminating in the November 2022 release of ChatGPT (built on GPT-3.5), was a breakthrough in accessibility, bringing conversational AI to millions. This changed everything.

Ironically, while big enterprises once held the monopoly on AI, they initially fell behind individual users in adopting large language models (LLMs).

But 2025 brought a fundamental shift. LLMs are now fueling agentic thinking – AI that doesn't just answer questions, but takes actions. Many compare this shift to the Industrial Revolution. Change is the only certainty: those who adapt fastest will come out on top.

The BVNK advantage: culture as an accelerator

Before discussing our technical pillars, let's first address our advantage: organizational culture.

In many companies, AI adoption stalls due to internal friction: compliance paralysis, security fears, or a lack of business buy-in for essential infrastructure. At BVNK, the story is different. Success wasn't mandated from the top; it was unlocked by leaders who focused on removing barriers for their teams from day one.

As a result, our adoption isn't limited to developers. Colleagues in Product, Compliance, Legal, and Support are actively leveraging AI to reshape their workflows. This company-wide embrace is fast becoming a key competitive advantage.

After culture come our technical pillars. We've identified four that have enabled us to scale AI within BVNK.


Pillar 1: Developer agent coding

Tools like Claude Code and Cursor are the “low-hanging fruit”. But developers must now understand the agentic nature of these tools – specifically how to manage context effectively without bleeding costs or performance.

Context Engineering: This is crucial for managing infrastructure costs. Too little context causes "amnesia" (the AI forgets what it's working on); too much makes LLMs slow and expensive. We've found that thoughtful context design is the difference between a 10x productivity multiplier and a 1x marginal improvement.

Methodology: Plan-mode and specification-driven development yield the best results. Rather than prompting the AI to "build this feature," our teams now write detailed specifications that guide the AI's reasoning, as you'd brief a senior engineer.

Persistent memory: We see great potential in maintaining implementation history for future coding interactions, similar to concepts in AgentOS. This prevents agents from starting from scratch every time, creating a cumulative "team memory."

Standardization: Unifying code standards via Claude Code plugins is a major upgrade. It ensures that AI-generated code consistently aligns with company best practices, eliminating the need for extensive code review cycles.
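To make the context-engineering trade-off above concrete, here is a minimal sketch of fitting context into a fixed token budget. It is an illustration only, not how Claude Code or Cursor manage context internally: the `ContextItem` type, the priority scores, and the rough "4 characters per token" estimate are all assumptions made for this example.

```python
from dataclasses import dataclass


@dataclass
class ContextItem:
    name: str
    text: str
    priority: int  # hypothetical score: higher means more important to keep


def estimate_tokens(text: str) -> int:
    # Crude assumption: roughly 4 characters per token. Real tokenizers differ.
    return max(1, len(text) // 4)


def select_context(items: list[ContextItem], budget: int) -> list[ContextItem]:
    """Greedily keep the highest-priority items that still fit in the budget."""
    selected: list[ContextItem] = []
    used = 0
    for item in sorted(items, key=lambda i: i.priority, reverse=True):
        cost = estimate_tokens(item.text)
        if used + cost <= budget:
            selected.append(item)
            used += cost
    return selected


items = [
    ContextItem("spec", "x" * 400, priority=3),            # ~100 tokens
    ContextItem("current_file", "y" * 800, priority=2),    # ~200 tokens
    ContextItem("old_chat_log", "z" * 4000, priority=1),   # ~1000 tokens
]
chosen = select_context(items, budget=350)
print([item.name for item in chosen])  # → ['spec', 'current_file']
```

With a 350-token budget, the specification and the current file are kept while the long, low-priority chat log is dropped. A budget that is too small would start cutting the specification itself, which is the "amnesia" failure mode described above.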

Pillar 2: Operations automation

Multimodal LLMs can now execute tasks, not just generate recommendations. But success depends on choosing the right orchestration engine for the job.

The tooling: We use n8n for quick, visual internal workflows. However, for complex backend logic requiring multi-step reasoning and state management, we rely on LangGraph. We also use Temporal for fixed, non-AI workflows that don't require reasoning.

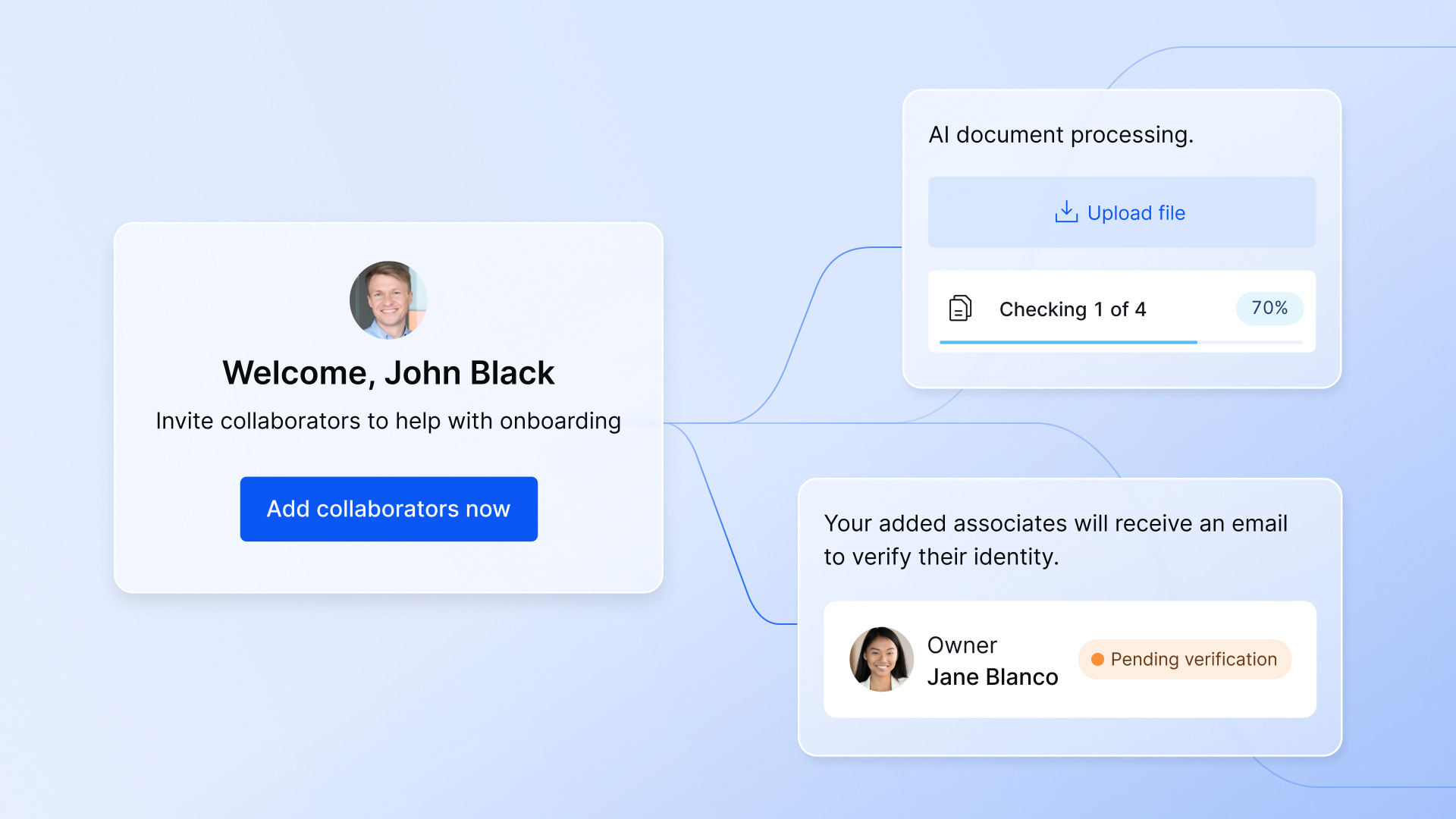

The use case: Our onboarding compliance agents now process identity documents, bank statements, and regulatory forms, extracting, validating, and flagging exceptions faster than humans, while generating a complete audit trail for regulators.

The trust gap: The critical challenge was making automation transparent enough that our Ops team could explain decisions to auditors. This is where governance becomes non-negotiable. We've implemented checkpoints where high-stakes actions require human approval, and every decision is logged with reasoning.
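The checkpoint pattern described above can be sketched in a few lines. This is a simplified illustration under stated assumptions, not BVNK's production implementation: `ProposedAction`, `run_with_checkpoint`, the in-memory `audit_log` list, and the example action names are all hypothetical.

```python
import json
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Callable


@dataclass
class ProposedAction:
    name: str
    reasoning: str      # the agent's explanation, kept for auditors
    high_stakes: bool


def run_with_checkpoint(
    action: ProposedAction,
    approve: Callable[[ProposedAction], bool],
    audit_log: list[dict],
) -> bool:
    """Auto-approve low-stakes actions; route high-stakes ones to a human."""
    if action.high_stakes:
        approved = approve(action)
        decided_by = "human"
    else:
        approved = True
        decided_by = "auto"
    # Every decision is recorded together with the agent's reasoning.
    audit_log.append({
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "action": action.name,
        "reasoning": action.reasoning,
        "decided_by": decided_by,
        "approved": approved,
    })
    return approved


audit_log: list[dict] = []


def reviewer(action: ProposedAction) -> bool:
    # Stand-in for a real review UI; this one rejects everything.
    return False


run_with_checkpoint(
    ProposedAction("flag_document", "Expiry date is in the past", False),
    reviewer, audit_log,
)
run_with_checkpoint(
    ProposedAction("reject_application", "Name differs between ID and statement", True),
    reviewer, audit_log,
)
print(json.dumps(audit_log, indent=2))
```

The design choice worth noting is that the log entry is written on every path, approved or not, so the audit trail also covers what the agent proposed but was not allowed to do.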

Pillar 3: Product integration

The immediate win here is the AI Assistant – giving users conversational access to their data via RAG (Retrieval-Augmented Generation). But the next evolution is Invisible AI.

Beyond Chat: Not every AI feature needs a chat box. The most powerful implementations often run silently in the background to remove friction.

A prime example is document parsing and prefilling: when a customer uploads an identity document or bank statement, the AI instantly extracts the relevant data and autofills the necessary forms, eliminating tedious manual entry.

Other examples include automatic onboarding review, detecting anomalies, or enriching transaction data without the user ever typing a prompt.

Fluid experience: Users care about results, not the technology. Whether it's a visible Assistant or an invisible process running in the background, the goal is a seamless experience that removes friction and accelerates outcomes.

Pillar 4: Trust, governance & evaluation

As we move from chatbots to agents that can execute tasks, "hoping it works" is no longer a strategy. This safety pillar requires a dedicated security stack.

Data Sovereignty & NDAs: Free models often train on your data. We strictly operate under clear NDAs with providers or deploy Sovereign AI (self-hosted models) to ensure our IP and customer data never leak into public training sets. This is non-negotiable for a financial services company.

Guardrails & Access: Tools like LiteLLM are essential for unifying model access and enforcing strict guardrails against threats like prompt injection.

Observability, evaluation & security: We rely on solutions like Langfuse to trace agent reasoning, using "LLM-as-a-judge" and deterministic testing to automatically score performance, detect regressions, and uncover vulnerabilities. Private MCP registries also allow us to govern tool usage company-wide.

Human-in-the-loop: We implement checkpoints where agents propose high-stakes actions, but humans approve them.
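The deterministic testing mentioned under observability can be illustrated with a small regression check. This sketch does not use Langfuse or an LLM judge; it only shows the deterministic half of the idea. The golden cases, the two stand-in "agents", and the function names are hypothetical and exist purely for this example.

```python
from typing import Callable

# Hypothetical golden cases: (input text, substring the output must contain).
GOLDEN_CASES = [
    ("IBAN: GB82WEST12345698765432", "GB82WEST12345698765432"),
    ("Account holder: Jane Doe", "Jane Doe"),
    ("No account details here", "NOT_FOUND"),
]


def score(agent: Callable[[str], str]) -> float:
    """Return the fraction of golden cases the agent passes."""
    passed = sum(1 for prompt, expected in GOLDEN_CASES if expected in agent(prompt))
    return passed / len(GOLDEN_CASES)


def has_regressed(baseline: float, candidate: float, tolerance: float = 0.0) -> bool:
    """Flag a candidate whose score drops below the baseline minus a tolerance."""
    return candidate < baseline - tolerance


def baseline_agent(text: str) -> str:
    # Stand-in for a real agent call: return the value after the colon.
    return text.split(":", 1)[1].strip() if ":" in text else "NOT_FOUND"


def candidate_agent(text: str) -> str:
    # A deliberately worse variant that never reports missing data.
    return text.split(":", 1)[1].strip() if ":" in text else ""


baseline_score = score(baseline_agent)
candidate_score = score(candidate_agent)
print(baseline_score, round(candidate_score, 2))
print("regression:", has_regressed(baseline_score, candidate_score))
```

Run on every prompt or model change, a check like this gives a pass/fail signal before a human or an LLM judge looks at the more subjective qualities of the output.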

Our foundation: infrastructure & observability

Underpinning all four pillars is robust infrastructure. We're discussing rapid changes and cutting-edge tools, but sound architectural practices – observability, cost management, and latency control – are still the backbone that makes this innovation possible.

Without strong foundations, even the best frameworks collapse under scale.

The leaders who will win in 2026 aren't those who banned AI due to compliance concerns, nor those who deployed it recklessly. They're the ones who built governance into their DNA from day one. At BVNK, we've proven this is possible.

The choice isn't between security and speed. With disciplined infrastructure, a governance mindset, and a culture that empowers teams to innovate responsibly, you can get both.

What’s next for BVNK

This adoption strategy is essential to scale the BVNK product and build upon our performance in 2025. By combining a culture of disciplined innovation with a four-pillar framework, encompassing developer agents, operations automation, product integration, and robust governance, BVNK is moving beyond hype to operationalize enterprise AI at scale.
